Bagging in Machine Learning Explained

If the classifier is unstable (high variance), then apply bagging.

A Primer To Ensemble Learning Bagging And Boosting

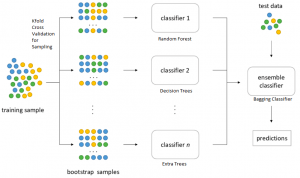

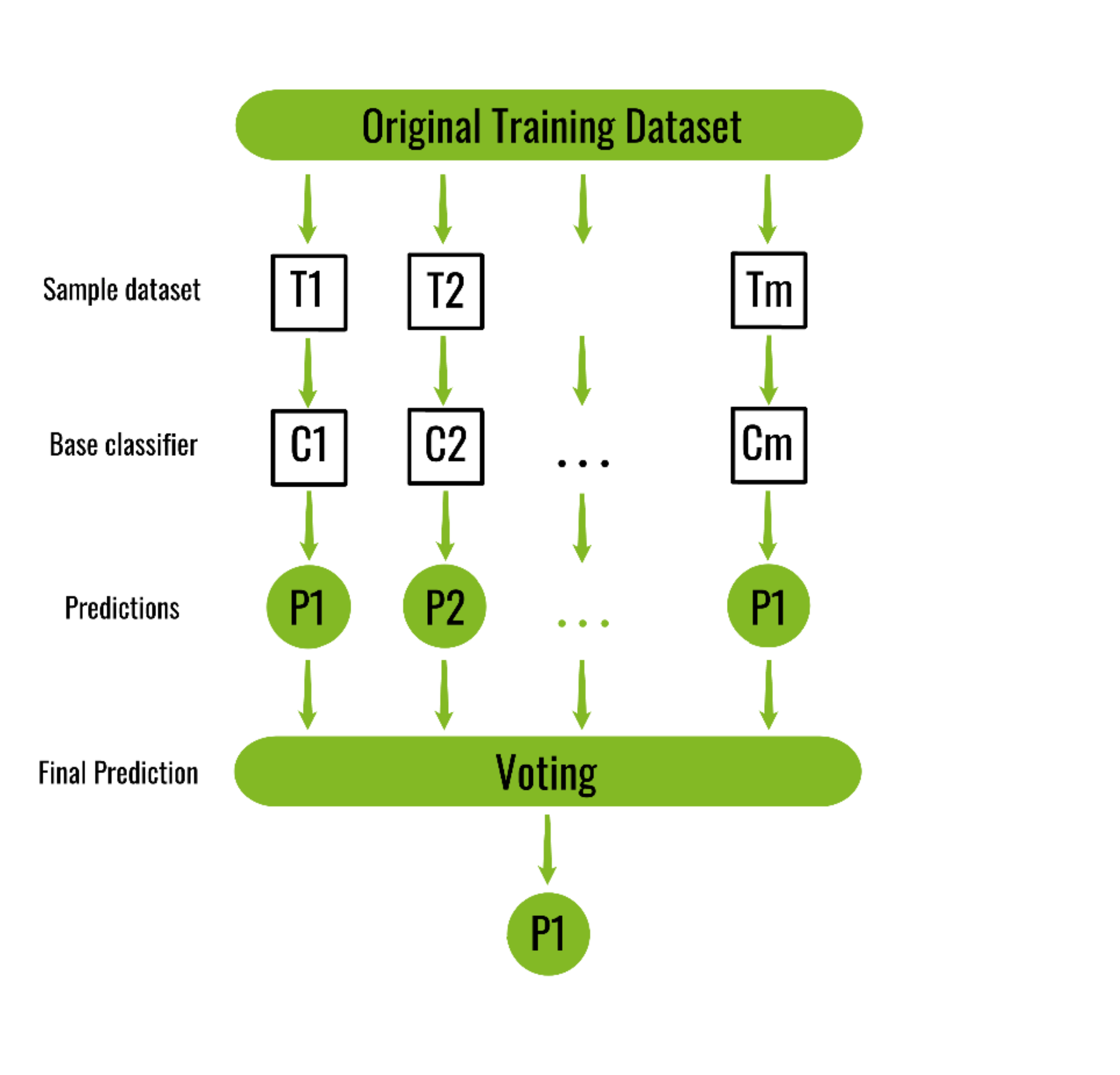

A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions (by voting or by averaging) to form a final prediction. Bootstrap aggregation, commonly known as bagging, is a powerful and simple ensemble method.
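The fit-on-random-subsets-then-aggregate idea can be sketched in plain Python. This is a minimal illustration, not a library implementation: the threshold "stump" base learner, the function names, and the toy data are all assumptions made up for this example.

```python
import random
from collections import Counter


def fit_stump(points):
    """Fit a one-feature threshold classifier (a hypothetical base learner).

    points is a list of (x, label) pairs with labels 0 or 1. The stump
    predicts 1 when x > threshold; the candidate threshold with the fewest
    training errors is chosen.
    """
    best_threshold, best_errors = None, None
    for threshold, _ in points:
        errors = sum((x > threshold) != bool(label) for x, label in points)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def fit_bagging(points, n_estimators=25, seed=0):
    """Fit one stump per random subset drawn with replacement."""
    rng = random.Random(seed)
    return [
        fit_stump(rng.choices(points, k=len(points)))
        for _ in range(n_estimators)
    ]


def predict_bagging(thresholds, x):
    """Aggregate the base classifiers' predictions by majority vote."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]


# Toy data: label 1 for larger x, with one noisy point at x = 4.
data = [(1, 0), (2, 0), (3, 0), (4, 1), (5, 0), (6, 1), (7, 1), (8, 1), (9, 1)]
model = fit_bagging(data)
print(predict_bagging(model, 0), predict_bagging(model, 10))  # prints "0 1"
```

An odd number of base classifiers is used here so that a two-class vote cannot tie.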

Bagging is usually applied where the classifier is unstable and has high variance.

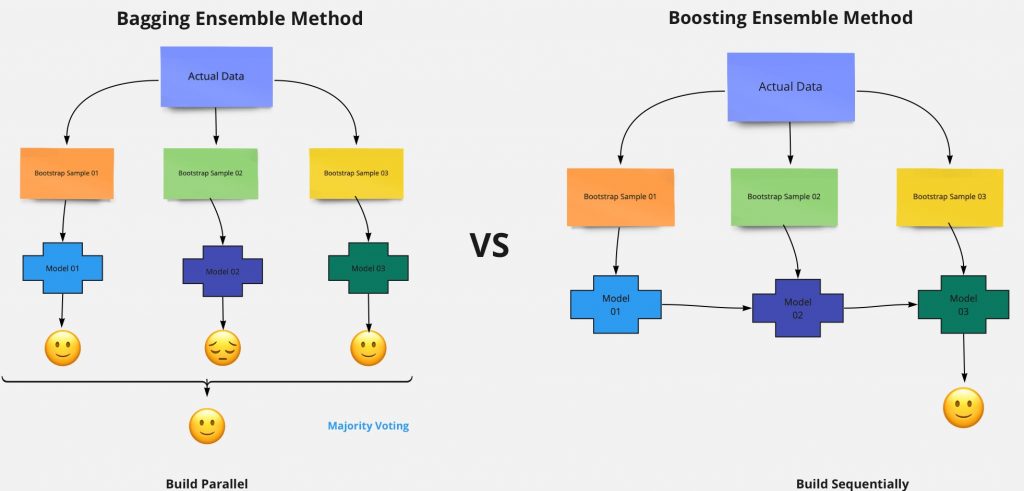

Bagging, a parallel ensemble method whose name stands for bootstrap aggregating, is a way to decrease the variance of a prediction model by generating additional training data through random sampling with replacement from the original set. (The post "Machine Learning Explained: Bagging" appeared first on Enhance Data Science.)

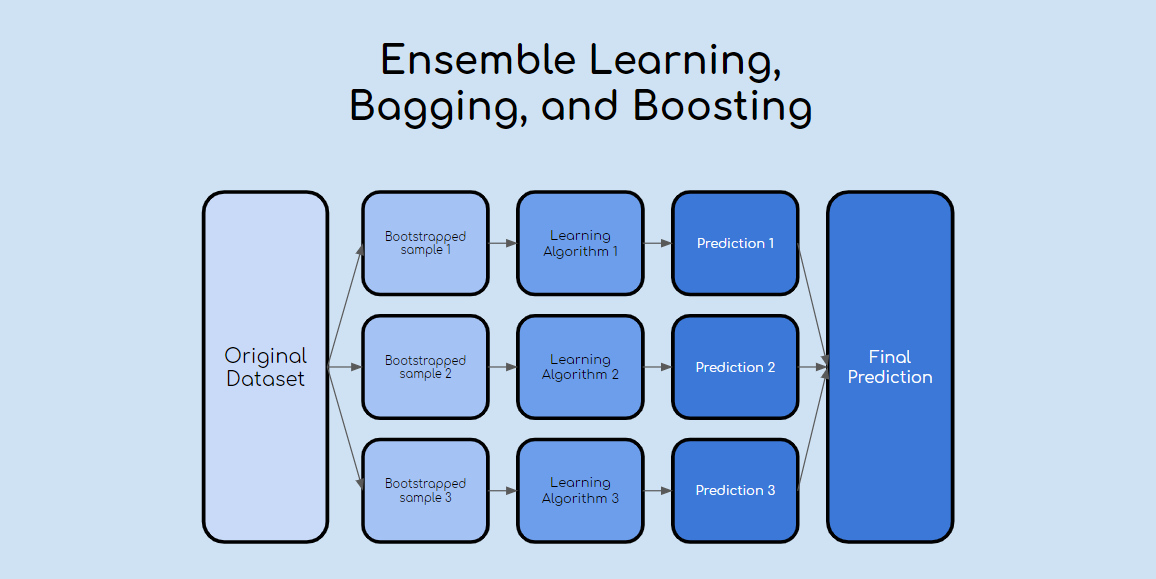

Ensemble learning is a machine learning paradigm in which multiple models, often called weak learners, are trained to solve the same problem and combined to get better results. Ensemble methods improve model precision by using a group of models instead of a single one. The first way to build multiple models is to train the same machine learning model multiple times on the same available training data.

Bagging, boosting, and stacking are all so-called meta-algorithms. Bagging is a method of merging predictions of the same type.

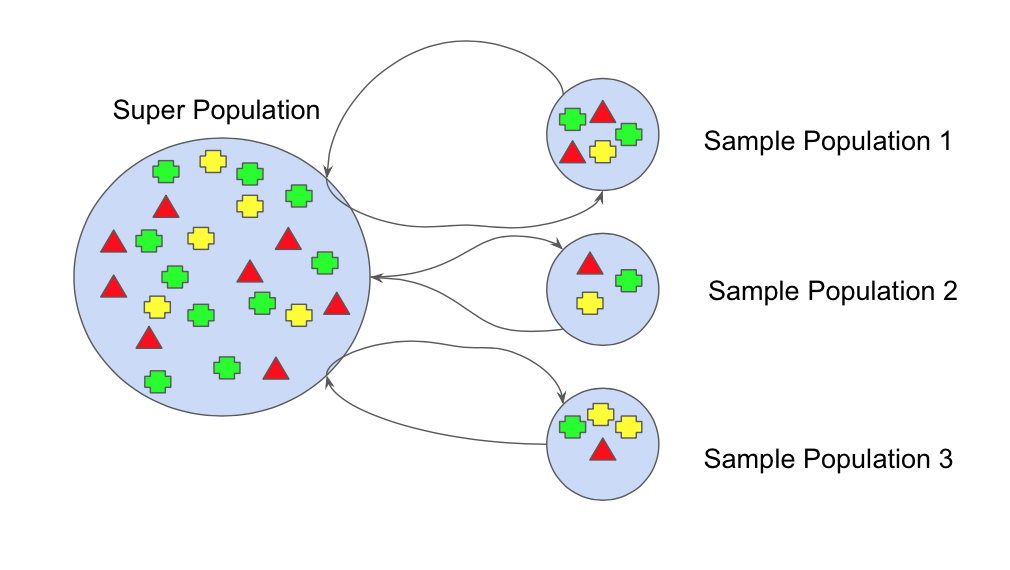

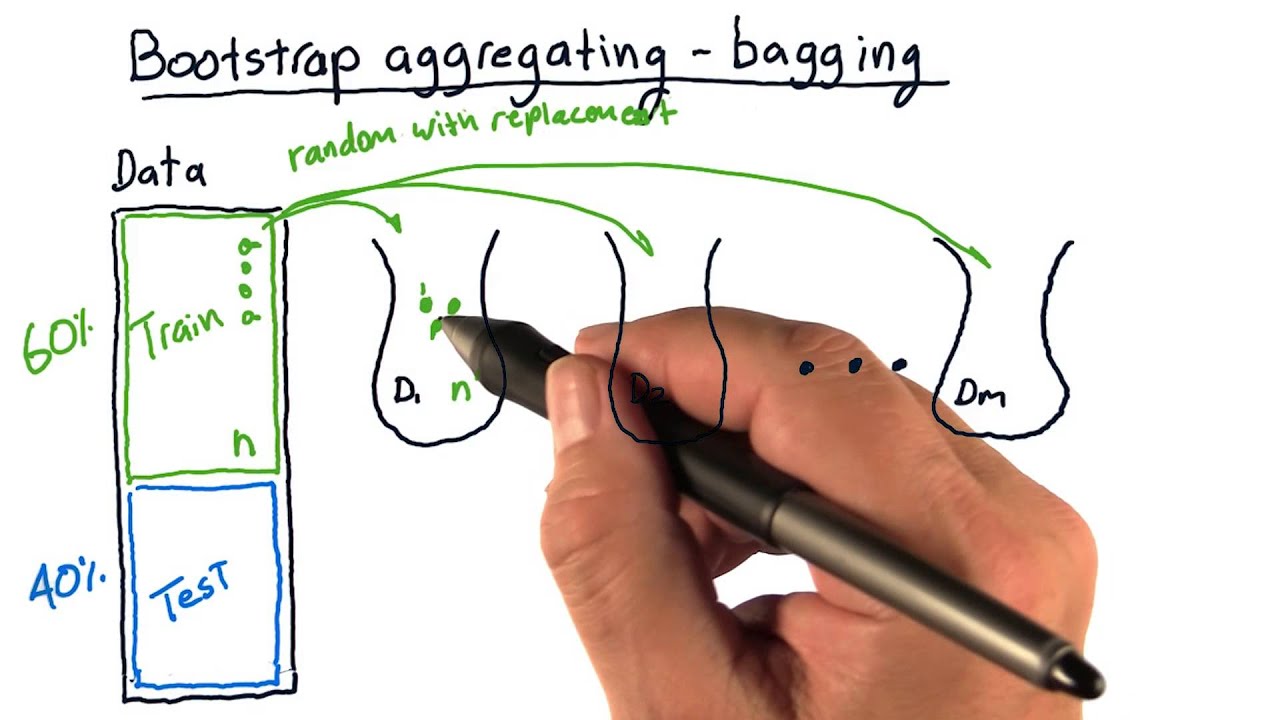

The bias-variance trade-off is a challenge we all face while training machine learning algorithms. The two main components of the bagging technique are random sampling with replacement (bootstrapping) and a set of homogeneous machine learning algorithms.

In bagging, a random sample of the training data is selected with replacement, meaning that individual data points can be chosen more than once.
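Sampling with replacement is easy to see on a tiny example. The sketch below assumes a hypothetical training set of ten instance ids and uses the standard-library `random.choices`; the variable names and the term "out-of-bag" for instances that were never drawn are introduced here for illustration.

```python
import random

rng = random.Random(42)  # fixed seed so the sketch is repeatable
training_set = list(range(10))  # hypothetical data: ten instance ids

# Draw a bootstrap sample: same size as the training set, with replacement.
bootstrap_sample = rng.choices(training_set, k=len(training_set))

# Instances that were never drawn are "out-of-bag" for this sample.
out_of_bag = sorted(set(training_set) - set(bootstrap_sample))

print("bootstrap sample:", bootstrap_sample)
print("distinct instances drawn:", len(set(bootstrap_sample)))
print("out-of-bag instances:", out_of_bag)
```

Because the draw is with replacement, some ids typically appear more than once while others are left out entirely, which is what makes each bootstrap sample differ from the original set.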

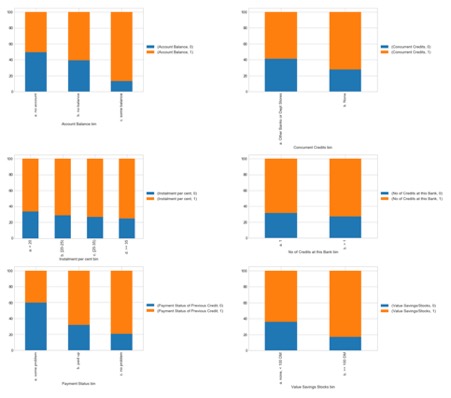

The bagging technique can be an effective approach to reduce the variance of a model, to prevent overfitting, and to increase the accuracy of unstable models. Bagging and boosting are similar in that they are both ensemble techniques in which a set of weak learners is combined to create a strong learner.

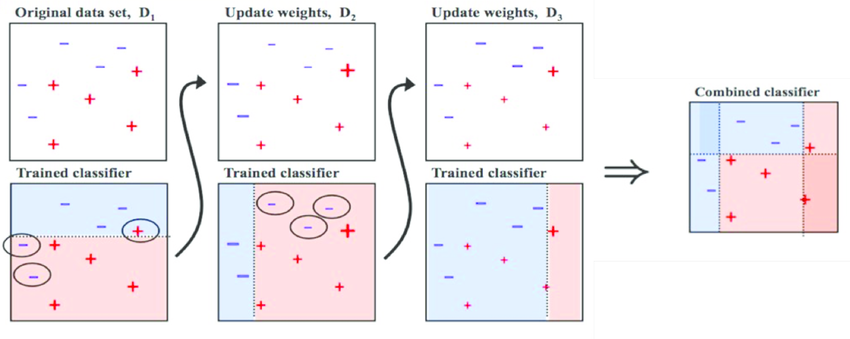

Boosting tries to reduce bias.

Boosting is usually applied where the classifier is stable and has high bias. Bagging is typically used when you want to reduce the variance while retaining the bias.
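The claim that bagging reduces variance while retaining bias can be made concrete under simplifying assumptions. Suppose, for illustration only, that the $B$ base models $\hat f_1, \dots, \hat f_B$ are identically distributed with variance $\sigma^2$ and pairwise correlation $\rho$ (these symbols are not from the source), and that the bagged prediction $\bar f$ is their average. Then:

```latex
\bar f(x) = \frac{1}{B} \sum_{b=1}^{B} \hat f_b(x),
\qquad
\mathbb{E}\big[\bar f(x)\big] = \mathbb{E}\big[\hat f_b(x)\big],
\qquad
\operatorname{Var}\big[\bar f(x)\big] = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
```

The expectation, and therefore the bias, is unchanged by averaging, while the second variance term shrinks as $B$ grows. The first term remains, so the less correlated the base models are, the more averaging helps.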

Ensemble machine learning can be mainly categorized into bagging and boosting, the two main types of ensemble learning methods. The bagging technique is useful for both regression and statistical classification.
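For regression, the aggregation step is typically an average of the base models' numeric predictions rather than a vote. The sketch below is one possible illustration in plain Python; the 1-nearest-neighbour base model, the function names, and the synthetic data are assumptions chosen for brevity, not something the source prescribes.

```python
import random
from statistics import mean


def fit_nearest_neighbour(points):
    """Return a 1-nearest-neighbour regressor, a high-variance base model."""
    def predict(x):
        nearest = min(points, key=lambda p: abs(p[0] - x))
        return nearest[1]
    return predict


def fit_bagged_regressor(points, n_estimators=50, seed=0):
    """Fit one base model per bootstrap sample and average their outputs."""
    rng = random.Random(seed)
    models = [
        fit_nearest_neighbour(rng.choices(points, k=len(points)))
        for _ in range(n_estimators)
    ]
    # For regression, aggregate by averaging the base models' predictions.
    return lambda x: mean(model(x) for model in models)


# Hypothetical noisy samples of y = 2x.
noise_rng = random.Random(1)
data = [(x, 2 * x + noise_rng.gauss(0, 1)) for x in range(20)]

bagged = fit_bagged_regressor(data)
print(round(bagged(10.0), 2))
```

Each base model simply echoes the target of its nearest training point, so a single model is sensitive to noise in that one point; averaging over bootstrap samples smooths this out.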

Bagging is a powerful ensemble method that helps to reduce variance and, by extension, prevent overfitting.

Meta-algorithms are approaches that combine several machine learning techniques into one predictive model in order to decrease the variance (bagging) or the bias (boosting).

By xristica, Quantdare. Bagging, also known as bootstrap aggregation, is an ensemble learning method that is commonly used to reduce variance within a noisy dataset. Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees.

Let's assume we have a sample dataset of 1000 instances. Variance is reduced when you average predictions made in different regions of the input space.
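For a dataset of 1000, a short calculation shows how much of the data a single bootstrap sample tends to cover. It assumes each bootstrap sample has the same size $n = 1000$ as the dataset, which the text does not state explicitly. The probability that a given instance is never drawn in $n$ draws with replacement is $(1 - 1/n)^n$, so:

```latex
\Pr[\text{instance is drawn at least once}]
  = 1 - \left(1 - \frac{1}{n}\right)^{n}
  \approx 1 - e^{-1}
  \approx 0.632
  \qquad (n = 1000).
```

Under that assumption, each bootstrap sample contains about 632 distinct instances on average, with the remaining draws being repeats.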

So before understanding bagging and boosting, let's get an idea of what ensemble learning is. Bagging is a powerful method to improve the performance of simple models and reduce overfitting of more complex models.

Ensemble Learning Bagging Boosting Ensemble Learning Learning Techniques Deep Learning

Bagging Vs Boosting In Machine Learning Geeksforgeeks

Bagging Classifier Python Code Example Data Analytics

Learn Ensemble Methods Used In Machine Learning

Bootstrap Aggregating Bagging Youtube

Guide To Ensemble Methods Bagging Vs Boosting

Bagging Ensemble Meta Algorithm For Reducing Variance By Ashish Patel Ml Research Lab Medium

Ensemble Learning Bagging And Boosting Explained In 3 Minutes

Ensemble Learning Explained Part 1 By Vignesh Madanan Medium

Difference Between Bagging And Random Forest Machine Learning Learning Problems Supervised Machine Learning

Bootstrap Aggregating Wikiwand

Bagging And Boosting Explained In Layman S Terms By Choudharyuttam Medium

A Bagging Machine Learning Concepts

What Is Bagging In Machine Learning And How To Perform Bagging

Ensemble Learning Bagging Boosting Stacking And Cascading Classifiers In Machine Learning Using Sklearn And Mlextend Libraries By Saugata Paul Medium

Ml Bagging Classifier Geeksforgeeks

Boosting And Bagging Explained With Examples By Sai Nikhilesh Kasturi The Startup Medium